Risk Analysis

Risk Analysis Framework

Introduction

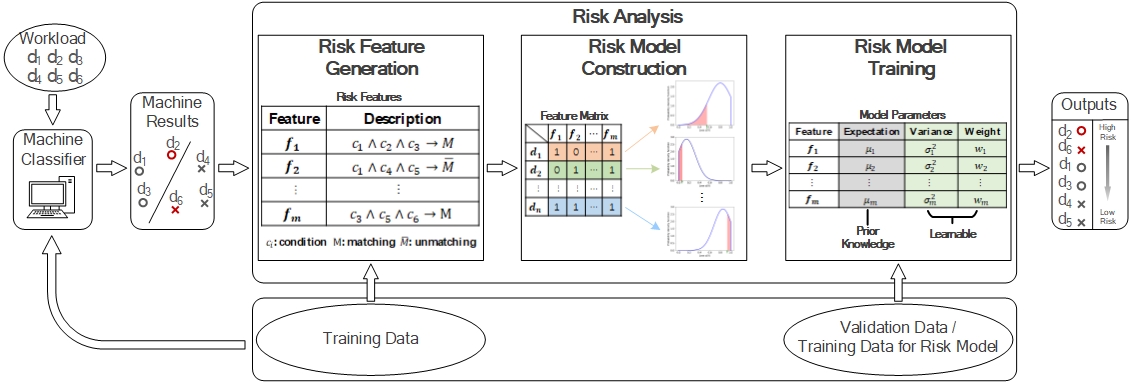

Given a classification task and a trained deep model, risk analysis estimates the risk that the model mislabels a target instance. Our specific contributions are as follows:

- We named the task risk analysis. The name comes from financial investment theory, which needs to measure the uncertainty of investment returns. Instead of using a single value to indicate the label status of an instance, we represent it by a distribution and use uncertainty metrics borrowed from financial investment theory to measure its fluctuation risk;

- We introduced the concept of risk features, and proposed a technique based on one-sided decision trees to automatically generate risk features;

- We proposed a learnable risk model, and presented its training techniques;

- We have applied risk analysis to enable quality control for human-machine collaboration on classification tasks.
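The idea in the first contribution, representing label status by a distribution and scoring its fluctuation with a financial risk metric, can be sketched as follows. This is a minimal illustration rather than the authors' implementation: it assumes the probability that an instance is positive is normally distributed, and it uses Value at Risk (VaR), a standard metric in financial investment theory, as the risk measure. The function name `value_at_risk`, its parameters, and the sample numbers are hypothetical.

```python
from statistics import NormalDist


def value_at_risk(mean, std, machine_label, confidence=0.9):
    """Return the VaR of mislabeling for one instance.

    Assumption: the probability that the instance is positive follows a
    normal distribution N(mean, std^2). The mislabeling loss is that
    probability if the machine label is 0, and its complement if the
    machine label is 1. VaR is the `confidence`-quantile of the loss,
    clipped to [0, 1].
    """
    expected_loss = 1.0 - mean if machine_label == 1 else mean
    if std == 0:
        quantile = expected_loss  # no fluctuation: the loss is a point value
    else:
        quantile = NormalDist(expected_loss, std).inv_cdf(confidence)
    return min(1.0, max(0.0, quantile))


# Hypothetical instances: (id, mean, std, machine_label).
instances = [
    ("a", 0.90, 0.02, 1),
    ("b", 0.90, 0.20, 1),  # same mean as "a", but far less certain
    ("c", 0.30, 0.05, 0),
]

# Rank instances from most to least risky.
ranked = sorted(instances, key=lambda t: value_at_risk(*t[1:]), reverse=True)
for name, mean, std, label in ranked:
    print(name, round(value_at_risk(mean, std, label), 3))
```

Instances "a" and "b" have the same expected loss, but "b" receives a higher risk because its distribution is wider; a single-valued confidence score would not separate them. In a human-machine collaboration setting, the top of such a ranking is a natural candidate list for manual verification.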

Our current work focuses on entity resolution. However, the proposed framework and techniques can be generalized to other classification tasks. Risk analysis is in itself an important and interesting research problem. Moreover, it can have a profound impact on the design and implementation of core machine learning operations, e.g., active selection of training instances, model training, and model selection. Our work therefore opens a promising research direction.

Applications

Adaptive Deep Learning for Network Intrusion Detection by Risk Analysis. Neurocomputing, 2022.

Lijun Zhang, Xingyu Lu, Zhaoqiang Chen, Tianwei Liu, Qun Chen, Zhanhuai Li

With increasing connectedness, network intrusion has become a critical security concern for modern information systems. Deep learning achieves the state-of-the-art performance on Network Intrusion Detection (NID). Unfortunately, NID remains very challenging, and in real scenarios deep models may still mislabel many network activities. There is therefore a need for risk analysis, which aims to identify which activities may be mislabeled and why.

In this paper, we propose a novel solution of interpretable risk analysis for NID that can rank the activities in a task by their mislabeling risk. Built upon the existing LearnRisk framework, it first extracts interpretable risk features and then trains a risk model with a learning-to-rank objective. It constructs risk features based on domain knowledge of network intrusion as well as statistical characteristics of activities. Furthermore, we demonstrate how to leverage risk analysis to improve the prediction accuracy of deep models. Specifically, we present an adaptive training approach for NID that can effectively fine-tune a deep model towards a particular workload by minimizing its misprediction risk. Finally, we empirically evaluate the performance of the proposed solutions on real benchmark data. Our extensive experiments show that the proposed risk analysis solution identifies mislabeled activities with considerably higher accuracy than the existing alternatives, and that the proposed adaptive training solution improves the performance of deep models by considerable margins in both offline and online settings.
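To illustrate what a learning-to-rank objective for a risk model can look like, here is a minimal sketch. It is not the objective used in the paper, whose exact form is not given on this page; it assumes a generic pairwise logistic loss, and the function name `pairwise_ranking_loss` and the sample scores are hypothetical.

```python
import math


def pairwise_ranking_loss(risk_scores, mislabeled):
    """Average logistic loss over (mislabeled, correct) instance pairs.

    The loss is small when every mislabeled instance receives a higher
    risk score than every correctly labeled one.
    """
    risky = [s for s, m in zip(risk_scores, mislabeled) if m]
    safe = [s for s, m in zip(risk_scores, mislabeled) if not m]
    if not risky or not safe:
        return 0.0  # no comparable pairs
    total = sum(math.log1p(math.exp(-(r - s))) for r in risky for s in safe)
    return total / (len(risky) * len(safe))


# Hypothetical validation data: True marks an activity the classifier mislabeled.
mislabeled = [True, False, False, True]
good_scores = [0.9, 0.1, 0.2, 0.8]  # mislabeled activities ranked on top
poor_scores = [0.1, 0.9, 0.8, 0.2]  # ranking inverted

print(pairwise_ranking_loss(good_scores, mislabeled))
print(pairwise_ranking_loss(poor_scores, mislabeled))
```

A pairwise loss fits the stated goal because the risk model only needs to order activities by mislabeling risk; it does not need to output calibrated probabilities.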

Selected Publications

Towards Interpretable and Learnable Risk Analysis for Entity Resolution. International Conference on Management of Data (SIGMOD), 2020.

Zhaoqiang Chen, Qun Chen, Boyi Hou, Tianyi Duan, Zhanhuai Li and Guoliang Li

@article{chen2019towards,

title={Towards Interpretable and Learnable Risk Analysis for Entity Resolution},

author={Chen, Zhaoqiang and Chen, Qun and Hou, Boyi and Duan, Tianyi and Li, Zhanhuai and Li, Guoliang},

journal={arXiv preprint arXiv:1912.02947},

year={2019}

}

Improving Machine-based Entity Resolution with Limited Human Effort: A Risk Perspective. International Workshop on Real-Time Business Intelligence and Analytics, 2018.

Zhaoqiang Chen, Qun Chen, Boyi Hou, Murtadha Ahmed, Zhanhuai Li

@inproceedings{chen2018risker,

title={Improving Machine-based Entity Resolution with Limited Human Effort: A Risk Perspective},

author={Chen, Zhaoqiang and Chen, Qun and Hou, Boyi and Ahmed, Murtadha and Li, Zhanhuai},

booktitle={Proceedings of the International Workshop on Real-Time Business Intelligence and Analytics},

series={BIRTE'18},

numpages={5},

year={2018},

doi={10.1145/3242153.3242156},

publisher={ACM},

}

r-HUMO: A Risk-aware Human-Machine Cooperation Framework for Entity Resolution with Quality Guarantees. IEEE Transactions on Knowledge and Data Engineering (TKDE), 2018.

Boyi Hou, Qun Chen, Zhaoqiang Chen, Youcef Nafa, Zhanhuai Li

@article{hou2018rhumo,

title={r-HUMO: A Risk-aware Human-Machine Cooperation Framework for Entity Resolution with Quality Guarantees},

author={Hou, Boyi and Chen, Qun and Chen, Zhaoqiang and Nafa, Youcef and Li, Zhanhuai},

journal={IEEE Transactions on Knowledge and Data Engineering (TKDE)},

year={2018},

doi={10.1109/TKDE.2018.2883532},

publisher={IEEE},

}

Enabling Quality Control for Entity Resolution: A Human and Machine Cooperation Framework. ICDE 2018.

Zhaoqiang Chen, Qun Chen, Fengfeng Fan, Yanyan Wang, Zhuo Wang, Youcef Nafa, Zhanhuai Li, Hailong Liu, Wei Pan

@INPROCEEDINGS{chen2018humo,

author={Z. Chen and Q. Chen and F. Fan and Y. Wang and Z. Wang and Y. Nafa and Z. Li and H. Liu and W. Pan},

booktitle={2018 IEEE 34th International Conference on Data Engineering (ICDE)},

title={Enabling Quality Control for Entity Resolution: A Human and Machine Cooperation Framework},

year={2018},

pages={1156-1167},

doi={10.1109/ICDE.2018.00107},

month={April},

}

A Human-and-Machine Cooperative Framework for Entity Resolution with Quality Guarantees. ICDE 2017.

Zhaoqiang Chen, Qun Chen, Zhanhuai Li

@inproceedings{DBLP:conf/icde/ChenCL17,

author = {Chen, Zhaoqiang and Chen, Qun and Li, Zhanhuai},

title = {A Human-and-Machine Cooperative Framework for Entity Resolution with Quality Guarantees},

booktitle = {33rd {IEEE} International Conference on Data Engineering, {ICDE} 2017, San Diego, CA, USA, April 19-22, 2017},

pages = {1405--1406},

year = {2017},

crossref = {DBLP:conf/icde/2017},

url = {https://doi.org/10.1109/ICDE.2017.197},

doi = {10.1109/ICDE.2017.197},

timestamp = {Wed, 24 May 2017 11:31:57 +0200},

biburl = {https://dblp.org/rec/bib/conf/icde/ChenCL17},

bibsource = {dblp computer science bibliography, https://dblp.org}

}